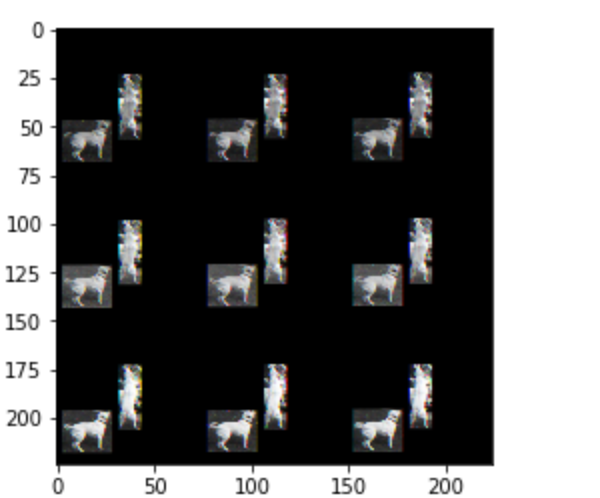

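Say you have a 2x2 image with 3 color channels. In PyTorch such an image is usually a tensor of shape (3, 2, 2), i.e. (channels, height, width). In memory, all values of the first channel come first, row by row, followed by the second channel and then the third. If you reshape that tensor to (height, width, channels) instead of reordering its axes, consecutive values (which were values of the same color channel and the same row) are now interpreted as different color channels.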
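You can see this by building the 2x2, 3-channel image explicitly and comparing `reshape` with `permute`. The channel values below are made up so that each channel is easy to recognize. This is an illustrative sketch, not code from the original question.

```python
import torch

# Hypothetical 2x2 image with 3 color channels, laid out as (channels, height, width).
# Values are chosen so the channel is visible: channel c holds 10*c + position.
chw = torch.tensor([
    [[0, 1], [2, 3]],      # channel 0 (e.g. red)
    [[10, 11], [12, 13]],  # channel 1 (e.g. green)
    [[20, 21], [22, 23]],  # channel 2 (e.g. blue)
])

# reshape reads the memory in the same order and just regroups it as (H, W, C),
# so the "channels" of pixel (0, 0) are three consecutive values of channel 0.
hwc_reshaped = chw.reshape(2, 2, 3)
print(hwc_reshaped[0, 0].tolist())  # [0, 1, 2]

# permute reorders the axes, so pixel (0, 0) gets its value from every channel.
hwc_permuted = chw.permute(1, 2, 0)
print(hwc_permuted[0, 0].tolist())  # [0, 10, 20]

# permute only changes the strides; the data in memory is not moved.
print(chw.stride())           # (4, 2, 1)
print(hwc_permuted.stride())  # (2, 1, 4)
```

The strides show the difference. `permute` only reorders the stride tuple and leaves the data where it is. `reshape`, by contrast, keeps reading the same memory in the same order under a new shape.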

# Permute in PyTorch: how to

I have the following code portion:

    import torch
    from torch.utils.data import DataLoader

    dataset = trainDataset()
    train_loader = DataLoader(dataset, batch_size=1, shuffle=True)
    device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

    for i, data in enumerate(train_loader, 0):
        inputs, labels = data  # assumed: each batch is an (inputs, labels) pair
        inputs, labels = inputs.float(), labels.float()
        inputs, labels = inputs.to(device), labels.to(device)

The issue is that the image doesn't display properly. For instance, the dataset I have contains images of cats and dogs, but the image displayed looks as shown below. I should also note that I get a different image on every run, but each one looks pretty close to the one shown. I'm not sure whether it is a NumPy thing or a matter of how the image is represented and displayed.

Following this solution, I now get the following: `image = images …`, and the image looks as shown below. I think the issue now is with how the image is represented.

So, after the explanation below, I have the following: `image = images …`, which produces the expected image :-)

Answer: In PyTorch you usually represent pictures with tensors of shape (channels, height, width). You then seem to reshape it to what you expect would be (height, width, channels). Note that these tensors or arrays are actually stored as a 1-D "array", and the multiple dimensions only come from defining strides (see "How to understand numpy strides for layman?"). A reshape keeps that memory order and only changes how it is indexed, so the channel values end up in the wrong places. To move the channel axis to the end, you need to permute the axes instead, e.g. with `permute(1, 2, 0)`.
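Under the assumptions of the question (channels-first images, `batch_size=1`), a display step might look like the following sketch. `batch_to_hwc_image` is a hypothetical helper name, and the random tensor only stands in for a real batch from `train_loader`.

```python
import torch


def batch_to_hwc_image(batch: torch.Tensor):
    """Turn a (1, C, H, W) batch into an (H, W, C) NumPy array for display.

    Hypothetical helper: assumes batch_size=1 and a channels-first image tensor.
    """
    image = batch[0]                # drop the batch dimension -> (C, H, W)
    image = image.detach().cpu()    # leave the autograd graph and move off the GPU
    image = image.permute(1, 2, 0)  # reorder axes -> (H, W, C) without reshuffling data
    return image.numpy()


# Random stand-in for one batch from train_loader: 3 channels, height 4, width 5.
fake_batch = torch.rand(1, 3, 4, 5)
hwc = batch_to_hwc_image(fake_batch)
print(hwc.shape)  # (4, 5, 3)
```

With matplotlib, `plt.imshow(batch_to_hwc_image(inputs))` would then receive the (height, width, channels) layout it expects. Float RGB images are expected in the [0, 1] range, so normalized data may need rescaling first.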

# Permute PyTorch code

Say we've created a NumPy array of integers, `np_array`, and turned it into three tensors: `tensor_a` with `torch.from_numpy()`, `tensor_b` with `torch.Tensor()` and `tensor_c` with `torch.tensor()`. So, what's the difference? The `from_numpy()` and `tensor()` functions are dtype-aware! Since `np_array` holds integers, `print(np_array.dtype)` shows an integer dtype: `int32` in this example. NumPy's default integer width is platform-dependent, so you may see `int64` instead.

If we print out the tensors, `tensor_a` shows up as `tensor(..., dtype=torch.int32)`. `tensor_a` and `tensor_c` retain the data type used within `np_array`, cast into PyTorch's variant (`torch.int32`), while `tensor_b` automatically converts the values to floats. You can also see this by checking their `dtype` fields: `torch.int32` for `tensor_a`, `torch.float32` for `tensor_b` and `torch.int32` for `tensor_c`.

## NumPy Array to PyTorch Tensor with dtype

These approaches also differ in whether you can explicitly set the desired dtype when creating the tensor. `from_numpy()` and `Tensor()` don't accept a `dtype` argument, while `tensor()` does. `tensor_c = torch.tensor(np_array, dtype=torch.int32)` either retains the NumPy dtype or creates a tensor with the dtype you specify. The three dtypes are still `torch.int32`, `torch.float32` and `torch.int32`.

Naturally, you can cast any of them very easily, which lets you set the dtype after creation as well. The `dtype` argument is therefore a convenience rather than the only way to control the type. After casting `tensor_a`, `tensor_b` and `tensor_c` to float (for example with `.float()`), all three report `torch.float32`.

## Convert PyTorch Tensor to NumPy Array

Converting a PyTorch tensor to a NumPy array is straightforward. A CPU tensor and the array returned by its `numpy()` method share the same memory, so all we have to do is "expose" the underlying data structure. Since PyTorch can optimize the calculations performed on data based on your hardware, there are a couple of caveats though. So why use `detach()` and `cpu()` before exposing the underlying data structure with `numpy()`, and when should you detach and transfer to a CPU?

### CPU PyTorch Tensor -> CPU NumPy Array

If your tensor is on the CPU, where the new NumPy array will also be, it's fine to just expose the data structure with `np_a = tensor.numpy()`. This works very well, and you've got yourself a clean NumPy array.

### CPU PyTorch Tensor with Gradients -> CPU NumPy Array

Tensors that also need gradients are a different case. If a tensor was created with `requires_grad=True`, as in `torch.tensor(..., dtype=torch.float32, requires_grad=True)`, this approach no longer works: `tensor.numpy()` raises `RuntimeError: Can't call numpy() on Tensor that requires grad.` You'll have to detach the underlying array from the tensor, which prunes away the gradients. `tensor.detach().numpy()` then returns a plain `float32` array.

### GPU PyTorch Tensor -> CPU NumPy Array

Finally, if you've created your tensor on the GPU, remember that regular NumPy arrays don't support GPU acceleration. They reside on the CPU! You'll have to transfer the tensor to the CPU with `cpu()` and then detach/expose the data structure.
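The conversions described in this section can be sketched in one script. It assumes an explicit `int32` array (to sidestep the platform-dependent default integer type) and PyTorch's default float dtype of `float32`. The last step uses the GPU only when CUDA is available and otherwise falls back to the CPU.

```python
import numpy as np
import torch

# NumPy -> PyTorch. An explicit int32 dtype avoids the platform-dependent default.
np_array = np.array([1, 2, 3, 4, 5], dtype=np.int32)

tensor_a = torch.from_numpy(np_array)                 # keeps int32, shares memory with np_array
tensor_b = torch.Tensor(np_array)                     # always uses the default float dtype
tensor_c = torch.tensor(np_array, dtype=torch.int32)  # copies the data; dtype can be set here

print(tensor_a.dtype, tensor_b.dtype, tensor_c.dtype)  # torch.int32 torch.float32 torch.int32

# from_numpy() shares memory with the array, torch.tensor() makes a copy.
np_array[0] = 100
print(tensor_a[0].item(), tensor_c[0].item())  # 100 1

# Casting after creation.
tensor_a_float = tensor_a.float()
print(tensor_a_float.dtype)  # torch.float32

# PyTorch -> NumPy, CPU tensor without gradients: numpy() shares memory.
cpu_tensor = torch.tensor([1.0, 2.0, 3.0])
np_a = cpu_tensor.numpy()
np_a[0] = 10.0  # also changes cpu_tensor

# Tensor that requires grad: numpy() refuses, so detach first.
grad_tensor = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
try:
    grad_tensor.numpy()
except RuntimeError as err:
    print("numpy() failed:", err)
np_b = grad_tensor.detach().numpy()

# Tensor that may live on the GPU: detach, move to the CPU, then expose as NumPy.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
gpu_tensor = torch.tensor([1.0, 2.0, 3.0], device=device, requires_grad=True)
np_c = gpu_tensor.detach().cpu().numpy()
print(np_c)
```

Chaining `detach().cpu().numpy()` is a defensive choice. It works for every case above, and `cpu()` is a no-op for tensors that are already on the CPU.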
